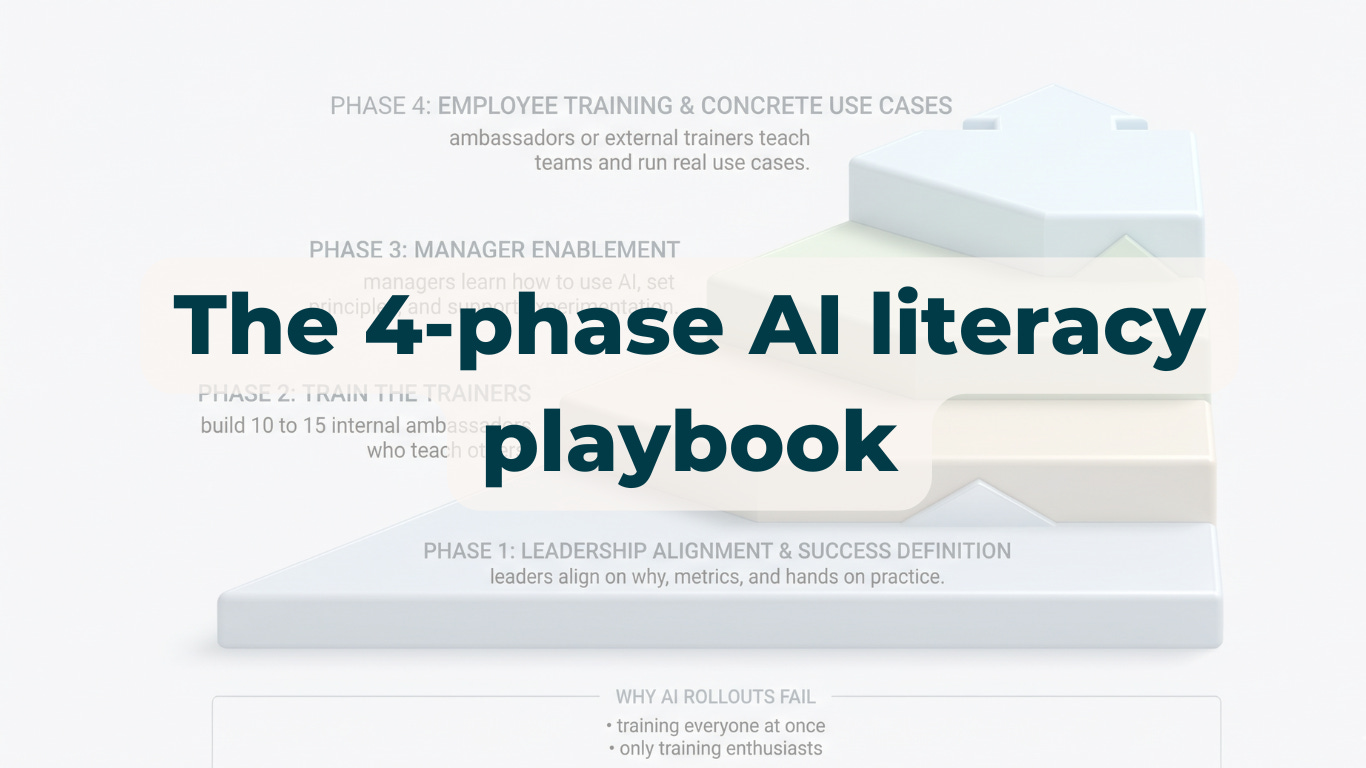

The 4-phase AI literacy playbook

Most AI rollouts fail. Here's the structure that doesn't.

Welcome to FullStack HR, and an extra welcome to the 48 people who have signed up since last week. If you haven’t yet subscribed, join the 10,100+ smart, curious leaders by subscribing here:

Happy Tuesday!

Tomorrow is THE day. We’ll host our AI Day. 500 people in the audience and great speakers on the stage. It will be all about AI adoption.

So I’ve been thinking a lot about this lately.

Not just theoretically, because I’ve spent the autumn working with several organizations on exactly this. And if there’s one thing I’ve learned, it’s that AI adoption is an investment. A real one. Leadership time. Manager time. Space for people to experiment. There are no shortcuts.

So today I’m sharing the playbook I’ve been using. Feel free to steal it and make it your own.

And would it be helpful if I shared the actual workshop PowerPoints as well? Happy to do so if there’s interest, so let me know in the comments.

Now, let’s get into it.

If you want different results, you need to do things differently.

I know. Groundbreaking stuff. But I keep having the same conversation with organizations: “We trained everyone on AI and nothing changed.”

Then I ask what they did, and it’s the same approach they use for everything else. A one-day workshop. An email with links to tutorials. Maybe a lunch-and-learn.

And they’re surprised when adoption flatlines after two weeks.

I’ve spent the autumn working with several organizations on AI implementation. Not theoretical frameworks but actual rollouts. What I’m sharing here is the structure that’s working. It’s not complicated. But it requires investment.

Time, not just budget.

And I’m not the only one seeing this. McKinsey’s latest research puts it bluntly: organizations spend 93% of their AI budget on data, tech and infrastructure and only 7% on people-related issues like training, workflow redesign, and change management. That ratio is backwards.

Why most AI rollouts fail before they start

Most organizations do one of two things:

They train everyone at once. A big “AI inspiration session” where 200 people sit through the same AI-hype-talk. Two weeks later, maybe 10% are still using what they learned. McKinsey found that 70% of employees simply ignore onboarding videos and formal courses - they rely on trial-and-error and peer discussions instead.

The fix isn’t complicated. But it requires doing things in the right order.

And it requires accepting that this takes time.

Phase 1: Leadership alignment and defining success

This is where everyone wants to skip ahead.

“We already know AI is important, let’s just start training people.”

No.

If your leadership team doesn’t have a shared understanding of why you’re doing this and what success looks like, you’ll hit resistance the moment someone asks “but what’s the ROI?” or “why are we doing this?”

Here’s what this phase needs to include:

A leadership workshop where executives get hands-on with the tools. Not a demo. Not a presentation. Actual exercises where they experience what AI can and can’t do. Most executives I work with are surprised by both the capabilities and the limitations.

(and yes, I talk about this in the AI adoption series)

Then you need to define what success looks like. What will you measure? Usage statistics alone won’t tell you much: someone can use Copilot daily and still not create any value. You need to connect AI adoption to something that matters to your business. Time saved on specific tasks. Quality improvements. Capacity freed up for higher-value work.

The companies getting this right are treating it as a leadership priority, not an IT project. Moderna, for instance, merged their HR and IT departments under a single executive specifically to align talent strategy with AI transformation. That’s a signal about how seriously they’re taking this.

Without clear metrics and leadership buy-in, you’re just hoping people find useful applications on their own. Some will. Most won’t.

Phase 2: Train the trainers (your AI ambassadors)

Here’s the thing about AI training: external consultants don’t scale. (And I’m saying this as an external consultant who does AI trainings…great business model to say “pls don’t give me a lot of work”.)

I can train 30 people in a workshop. Maybe 50 if I push it. But if you have 500+ employees, the math doesn’t work. And even if it did, one-off training doesn’t stick.

What works instead? Building an internal group of 10-15 people who become your AI ambassadors.

People who:

Are curious about AI (they don’t need to be experts)

Have credibility with their colleagues

Represent different parts of the organization

These people get intensive training. Not just “here’s how to use Copilot” but “here’s how to teach others, here’s how to handle resistance, here’s how to run a workshop.”

This usually takes 3-4 sessions over several weeks. With practice between sessions. And individual support when they get stuck.

The goal isn’t to make them AI experts. It’s to make them confident enough to help their colleagues experiment.

Done right, you now have 10-15 people who can each train 20-50 people per session. That’s how you scale this effectively.
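If you want to sanity-check the scaling math for your own organization, here is a rough sketch. It only uses the numbers from this section (500 employees, 30 people per consultant workshop, the low end of 10 ambassadors with 20 people each). The function name and the simplification that all trainers run their sessions in parallel are my assumptions for illustration, not a planning tool.

```python
import math


def sessions_needed(employees: int, trainers: int, people_per_session: int) -> int:
    """Rounds of sessions needed to reach everyone once.

    Assumes (for illustration) that all trainers run one session per round,
    in parallel, and that nobody attends twice.
    """
    capacity_per_round = trainers * people_per_session
    return math.ceil(employees / capacity_per_round)


EMPLOYEES = 500  # the "500+ employees" example from the text

# One external consultant, 30 people per workshop
consultant_rounds = sessions_needed(EMPLOYEES, trainers=1, people_per_session=30)

# Low end of the ambassador ranges: 10 ambassadors, 20 people each
ambassador_rounds = sessions_needed(EMPLOYEES, trainers=10, people_per_session=20)

print(consultant_rounds)  # 17
print(ambassador_rounds)  # 3
```

Even at the low end of the ranges, the ambassador group covers the organization in a handful of rounds, while a single consultant needs many separate workshops. The sketch ignores follow-up sessions, which is where ambassadors matter most anyway.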

Phase 3: Manager enablement

I’ve seen this go wrong so many times.

A team member gets excited about AI. They start using it for reports, emails, analysis. Their manager notices and says “I don’t think we should be using that for client work” or “how do I know the AI isn’t making things up?”

Game over.

If managers don’t understand AI, they’ll block it. Not out of malice, but out of legitimate concern about quality, accuracy, and risk.

So before you train all employees, you train managers. Specifically on:

How they can use AI in their own work (they need to experience the value firsthand)

How to set principles for AI use in their team (not banning it, but guiding it)

How to encourage experimentation without losing quality control

Harvard Business Review’s research confirms what I’ve seen in practice: leaders must be able to show - not just tell - how AI makes work better. And middle managers often matter more than the C-suite for driving actual adoption.

Phase 4: Employee training and concrete use cases

Now - and only now - you train the broader organization.

You can either have your ambassadors run the training. They know the organization, they speak the language, they’ll be there for follow-up questions. The downside: it’s slower, and they have day jobs.

Or you can, of course, bring in external people like me to do the training. It’s faster, but you lose the internal connection. And the moment the external person leaves, so does the momentum.

My recommendation is, once again, to have ambassadors do it whenever possible. The relationship matters more than perfect delivery.

The other part of this phase is picking one or two concrete use cases and implementing AI in them properly. Document what works. Create a case study your organization can learn from.

This matters because abstract training (“here’s what AI can do”) loses to concrete examples. P&G ran a study with Harvard Business School where teams using AI were 12% faster - but more importantly, AI helped break down silos between R&D and marketing by acting as a translator between disciplines. That’s the kind of specific, measurable outcome that builds internal momentum.

This requires investment

I’ve said it before, but I’ll say it again: this isn’t a quick fix.

You’re looking at 3-4 months minimum to do this properly. Leadership time in the early phases. Ambassador time for training. Manager time for enablement workshops.

And crucially: time for employees to experiment.

The organizations that succeed at this don’t just give people access to tools - they create space for people to learn.

Spotify opened up their traditional engineering Hack Week to all 7,000 employees specifically for AI experimentation. The principle is the same: dedicated time to play and learn, not just another task on top of an already full workload.

Kellanova (formerly Kellogg’s) took a similar approach with their “Kuriosity Clinics” - voluntary sessions where employees experiment with AI in a safe environment. Over 10,000 employees participated. The key insight from both these examples is building a culture of curiosity rather than a culture of compliance. Which, once again, requires a time investment (and a budget investment as well).

And I get why organizations want to shortcut it. Training budgets are tight. We usually don’t have a lot of time on our hands in our daily jobs. Everyone’s busy.

“Can’t we just send everyone a link to some tutorials?”

You can. But you’ll get the same results you’ve always gotten from that approach.

(Hint: not much will happen…)

Here’s what happens when you skip steps:

Skip Phase 1, and you’ll have managers asking “why are we doing this?” halfway through rollout. You’ll spend twice as long justifying the investment as you would have spent doing the alignment work.

Skip Phase 2, and you’re dependent on external consultants forever. Your internal capability never builds. The moment budget tightens, adoption stalls.

Skip Phase 3, and your enthusiastic employees hit a wall of manager skepticism. “My boss doesn’t get it” becomes the reason nothing changes.

Skip the concrete use cases in Phase 4, and you have no proof that any of this works. Just vibes and hope.

McKinsey’s State of AI report shows that while 88% of organizations use AI, only 6% are high performers. The difference isn’t the technology. It’s whether organizations treat this as a change management challenge or just a tech rollout.

This is just the foundation

I want to be clear about something: what I’ve described here is AI literacy. It’s the baseline. It’s getting your organization to the point where people can use AI tools competently and confidently.

It’s not the end goal.

If you believe AI will fundamentally change how work gets done - if you’re planning for a future where AI agents handle significant parts of workflows, where roles get redesigned, where competitive advantage comes from how well you integrate AI into your operations - then this playbook is just step one.

I wrote about the different scenarios for AI’s impact a while back. If you’re planning for scenario 2 or higher, you need this foundation in place first. You can’t redesign workflows with AI if your managers don’t understand what AI can do. You can’t build AI-augmented teams if your people are afraid of the technology.

This playbook builds that foundation. What you do after that depends on how transformative you believe AI will be for your business.

How to know if you’re ready for this

This approach isn’t for everyone.

It’s for organizations that believe AI is strategically important - not just a nice-to-have.

It’s for leadership teams willing to invest time in the early phases, not just budget.

It’s for companies that want to build internal capability, not just check a training box.

If that’s not where you are right now, that’s fine. But then don’t be surprised when adoption stalls. And don’t blame the technology.

If you want different results, you need to do things differently. That’s it. That’s the whole insight.

What does your organization’s AI implementation look like right now?

I’m curious whether you’re seeing similar patterns.