Is the EU AI Act killing HR's AI future?

A rundown of what the EU AI Act means for HR.

Welcome to FullStack HR, and an extra welcome to the 43 people who have signed up since last week. If you haven’t yet subscribed, join the 7300+ smart, curious HR folks who already have.

Happy Friday,

With a slight delay, I will try to make a podcast about all the topics I post here. Not a podcast where I read the article from top to bottom, but one that gives it a bit more life.

(Whatever that is.)

I’ll also cross-post it so you can find it on your preferred platform.

As I’m writing this late, late on Thursday, and I’m taking a day off when you read this, the podcast version of the article will be published early next week.

(All of this makes total sense, right?)

I’ll try to post them a bit closer together in the future.

(Also, we have a handful of spots left on the big AI & HR Course we run this fall.)

Anyhow, today’s article is about regulation. Not regulation in general but, more specifically, the EU AI Act.

I’ve been trying my best to follow along with the details surrounding the EU AI Act, which, in all honesty, has been a bit of a hassle and has led me to read more websites from the European Parliament than is probably healthy, but here we are.

Let’s get to it.

EU AI Act is here

As of August 1st, 2024, the EU AI Act (officially known as Regulation (EU) 2024/1689) is the law of the land. Or at least it has been decided that it should be: the Act has entered into force, but most of its obligations only start to apply later.

According to lawmakers, this is the first-ever comprehensive legal framework for AI.

The Act isn't just about setting rules; the EU aims to ensure AI is safe, trustworthy, and ethically sound. The EU also aspires to position Europe as a leader in AI development. (We’ll get back to my thoughts on this one…)

Why we in HR should care.

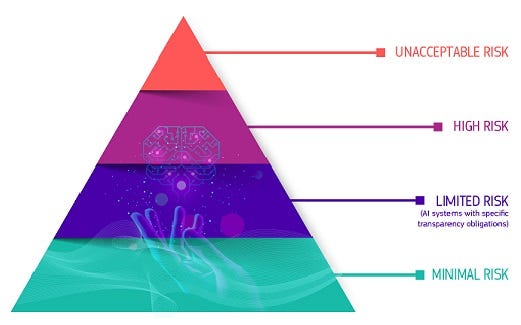

Now, you might be thinking, "Great, another regulation. How does this affect me?" Well, let me tell you: it affects us in HR quite a bit. The basics are that the AI Act sorts AI systems into four risk levels, and many of our HR tools land in the high-risk one.

The categories are:

Unacceptable Risk: These are the no-go zones. The Act bans AI systems that are considered a clear threat to people's safety, livelihoods, and rights. Think of social scoring by governments or AI in toys that encourage dangerous behavior. It's not really our domain in HR, but it's good to know.

High Risk: This is where most of our HR tools land. The Act specifically mentions AI used in "employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment procedures)" as high-risk. These systems need to meet strict requirements around transparency, traceability, and human oversight.

Limited Risk: This category introduces specific transparency obligations. For instance, chatbots need to make it clear they're AI, not human. Also, AI-generated content must be identifiable. Imagine using an AI to draft job descriptions – we'd need to disclose that.

Minimal or No Risk: The Act allows free use of these applications, which could include things like AI-enabled scheduling tools or simple data analysis in HR.

The Non-Negotiable: Transparency

I have my objections about the EU AI Act (and yes, I promise we’ll get to them shortly), but I do agree with the intent and use of transparency.

If we're using AI in HR—whether for hiring, performance reviews, or anything else—we need to be upfront about it.

The Act requires "clear and adequate information to the deployer" for high-risk AI systems. For us in HR, this means we need to understand how our AI tools work and be able to explain them to our employees and candidates.

Yes, candidates also need to know if we are going to use AI in the vetting process.

One idea here could be to create a dedicated section on your HR webpage where employees can learn about the AI tools you're using. Make it easy for them to understand what's happening behind the scenes. This will go a long way toward creating a culture of openness and accountability, and it is also a good way to show that you are an innovative organization that utilizes AI.

What else do we need to do?

With the AI Act now in effect, here are some actionable steps you can take:

Inventory your AI tools: Map out all the AI systems your organization uses. Identify which ones are considered high risk under the EU AI Act.

Talk to your vendors: Have open, candid conversations with your AI vendors. Ask them how their systems comply with the new regulations, especially around data quality, risk assessment, and human oversight. They might not know this yet, but by asking these questions now, you’ll be better off in the future.

Update your policies: Make sure your HR policies reflect these new regulations. This might involve updating data protection protocols and setting up clear procedures for handling AI-related decisions.

Prepare for compliance: The Act will be fully applicable in two years, with some exceptions. Start preparing now to ensure you're ready when the time comes. (Hey, hey, GDPR debacle incoming again?)

Invest in AI literacy: Ensure that your HR team and broader organization understand the basics of AI and its implications. This knowledge will be crucial for making informed decisions about AI adoption and use.
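If you'd rather not start in a spreadsheet, here is a minimal sketch in Python of what the first two steps could look like. Everything in it is an assumption for illustration: the tool and vendor names are made up, the `AITool` and `needs_follow_up` names are mine, and the risk tier on each tool is your own working assessment based on the four categories above, not a legal classification.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described in the article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AITool:
    name: str                      # hypothetical tool name
    vendor: str                    # hypothetical vendor name
    use_case: str                  # what HR uses it for
    tier: RiskTier                 # your own assessment, not a legal ruling
    vendor_contacted: bool = False # have we asked the vendor about compliance?


def needs_follow_up(inventory):
    """Return high-risk tools whose vendor has not yet been asked about compliance."""
    return [
        tool for tool in inventory
        if tool.tier is RiskTier.HIGH and not tool.vendor_contacted
    ]


# Illustrative inventory; replace with your organization's real tools.
inventory = [
    AITool("CV screener", "ExampleVendor A", "recruitment", RiskTier.HIGH),
    AITool("Candidate chatbot", "ExampleVendor B", "candidate Q&A", RiskTier.LIMITED),
    AITool("Meeting scheduler", "ExampleVendor C", "scheduling", RiskTier.MINIMAL),
    AITool("Performance scoring", "ExampleVendor D", "management of workers",
           RiskTier.HIGH, vendor_contacted=True),
]

for tool in needs_follow_up(inventory):
    print(f"Follow up with {tool.vendor} about {tool.name}")
```

With the made-up inventory above, only the CV screener is flagged, since the performance scoring vendor has already been contacted. The point is less the code and more the habit: one list, one tier per tool, and a clear record of which vendor conversations are still open.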

Do I like this or not?

As you might have guessed, I’m a bit torn about the EU AI Act, and it’s been hard to grasp what it really means. So, late this summer, I put real effort into understanding it, quirks and kinks included, along with its implications. And the more I read, the more critical I became.

While the EU AI Act is a step forward in ensuring safe and ethical AI, from my point of view, there's a real risk that Europe could start lagging in the global AI race.

And this isn't just about missing out on cool new tech – it's about potentially losing our competitive edge in a rapidly evolving global economy.

The Act does try to address this by reducing administrative and financial burdens for businesses, especially SMEs. It's part of a wider package, including the AI Innovation Package and the Coordinated Plan on AI. But will it be enough?

I'm not so sure, and here's why:

Overregulation could stifle innovation: We're already seeing signs of this. For instance, Apple recently announced it's withholding certain AI features from EU users due to concerns about compliance with the Digital Markets Act. This kind of self-censoring by tech giants could become more common, leaving European users with less advanced tools compared to their global counterparts.

Global competitiveness: In a world where AI is increasingly becoming a key driver of productivity and innovation, falling behind in AI adoption and development could have far-reaching consequences for Europe's global competitiveness. This could affect not just the tech sector, but industries across the board, including manufacturing, healthcare, and finance.

Potential exodus of tech companies: The EU's regulatory approach, particularly the threat of fines of up to 7% of global annual turnover under the AI Act (and up to 10% under the Digital Markets Act), could make the European market less attractive for tech companies. While established players like Meta or Apple might stay due to their existing user base, we might see fewer new entrants willing to navigate the complex regulatory landscape.

Impact on AI research and development: Strict regulations could potentially discourage companies from conducting cutting-edge AI research and development in Europe. If companies perceive the regulatory environment as too restrictive or unpredictable, they might choose to focus their R&D efforts in other regions with more favorable conditions.

HR Tech implications: For us in HR, this isn't just about missing out on the latest chatbot or predictive analytics tool. AI has the potential to revolutionize how we manage talent, engage employees, and make strategic decisions. If European companies have less access to or are more hesitant to adopt advanced AI tools due to regulatory concerns, it could impact everything from recruitment processes to employee development programs.

The challenge for us in the EU is to find a balance between protecting citizens' rights and fostering innovation. While the AI Act aims to do this, there's a risk that in trying to lead the world in regulation, the EU might inadvertently hinder its ability to innovate.

We in HR need to stay informed and adaptable. While complying with regulations is crucial, we also need to be proactive in seeking out and adopting AI solutions that can enhance our work. Let's not allow the EU AI Act to scare us too much or stifle innovation inside our organizations.

The Road Ahead

The EU AI Act is undoubtedly a landmark piece of legislation, but its success will ultimately be judged not just by how well it protects citizens but also by how well it enables European businesses to compete on the global stage.

As HR professionals, we need to be part of this conversation, ensuring that our needs for innovative, powerful AI tools are heard while championing ethical and responsible AI use.

We're at a crucial juncture where the decisions made now will shape the future of work, innovation, and global competitiveness.

It's an exciting time, but one that requires careful navigation.

I think staying informed and adaptable is key. The AI landscape is evolving rapidly, and so will the regulatory environment.

By staying engaged with these issues, we can help ensure that HR remains at the forefront of innovation while also upholding the ethical standards that are so crucial to our profession.