HR, We Have a Problem

The gap between AI capability and organizational reality is becoming dangerous

Welcome to the 132 new FullStack HR readers who joined last week. Welcome, all, to this publication! And hello if you were sent this link: if you aren't yet subscribed, join the other like-minded people reading this free newsletter by subscribing below.

Happy Wednesday.

Last week was another one of those super-intense weeks I keep telling myself I won't have. But that's what happens when you meet a lot of people. And I met a lot of people. Between a trade show, sitting down with about 45 very senior HR leaders, and sessions with management teams, the data points just kept pouring in.

All of it feeds into the mental model I'm constantly building. And combined with everything still happening with Claude Code, Claude Computer Use, and Codex, there's something we need to talk (more) about.

I had planned to continue my leadership series. I wrote the next article over Christmas and was ready to publish it right after the holidays. But then both Claude Code and Claude Computer Use exploded over those January weekends, and I had to write about that instead. Now I’m back, and I still don’t think people have grasped what’s happening.

So let’s get to it.

I Might Be Losing My Mind

There is an enormous gap between what these tools can do and what people understand is possible. And sometimes, I genuinely feel like I’m going crazy. Like maybe it’s me. Maybe I’m overhyping this. Maybe I’m putting too much into it. Because when you meet people in organizations, the reality you encounter is absolutely nowhere close to where the technology is.

Part of that is down to bad models. And I don't mean bad as in the technology is bad. I mean that people are often using the free version of ChatGPT without access to the latest model. Or they only have access to Copilot Chat, which is significantly more limited than what you get with a full Copilot license. To put it simply, they haven't experienced what the frontier models can actually do when used properly.

When you're on the more limited, free models, the work still falls heavily on you as a user. You have to write very specific prompts. You have to provide the right data. You have to give a lot of context. Otherwise, these models aren't capable enough to draw out what they need from you, and you get a mediocre answer.

But when you use the frontier models? Claude Code writes and executes code that acts on your computer. As a paying user, you get access to Opus 4.6 or ChatGPT 5.2 Pro, and you get a completely different experience. More thorough answers. Practically zero hallucinations, at least in my experience. You suddenly have models that are genuinely capable.

Ethan Mollick put it well (as always), and I very much agree with him here:

The Coding Revolution Is a Preview

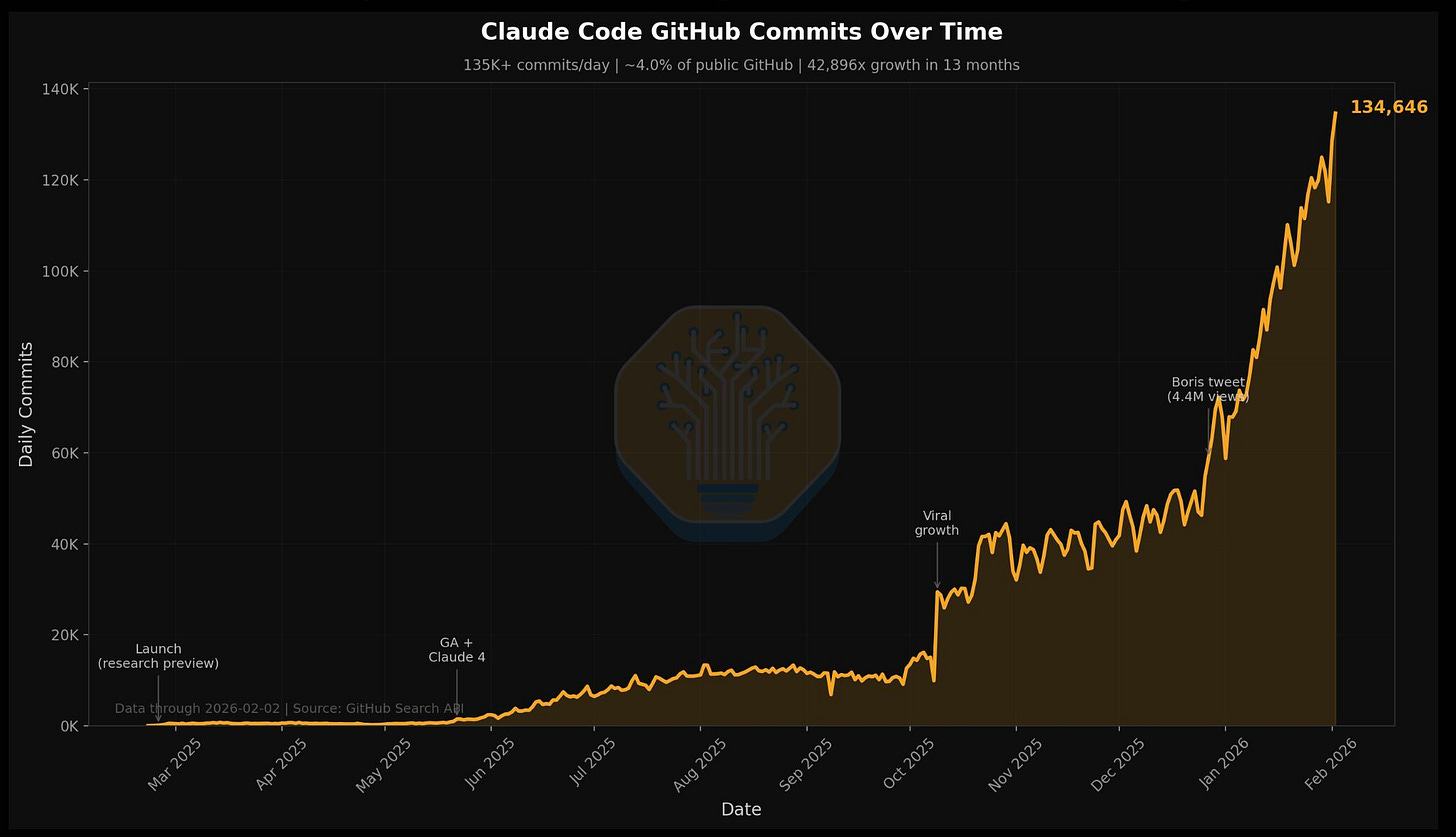

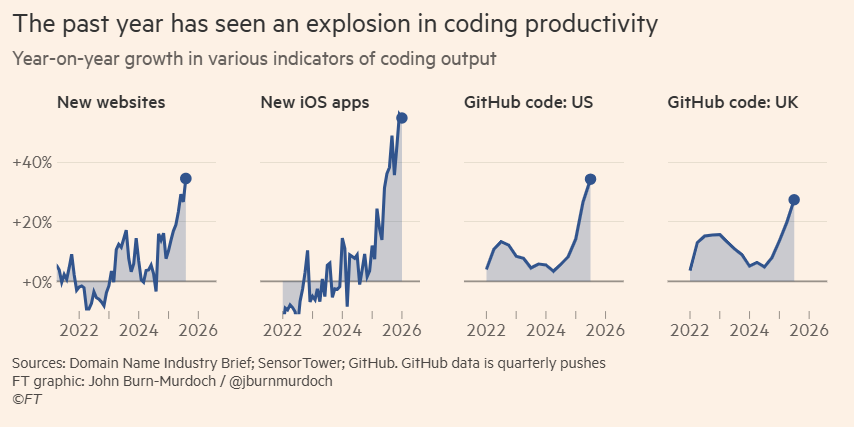

Right now, a real transformation of the coding profession is underway. GitHub’s data shows that Claude Code alone is contributing an enormous amount of code through pull requests and commits.

Talk to anyone working in tech, and they'll tell you quality is no longer the issue. There are still challenges with large, complex codebases, but capable coding assistance is everywhere, and its presence is obvious.

Add to this what's called OpenClaw. I think the conversation got derailed when everyone focused on Moltbook, that vibe-coded social network for agents. It was flashy but mostly hollow: easy to prompt-inject and full of security issues. But Moltbook overshadowed what really matters, especially for those of us in leadership and organizational roles.

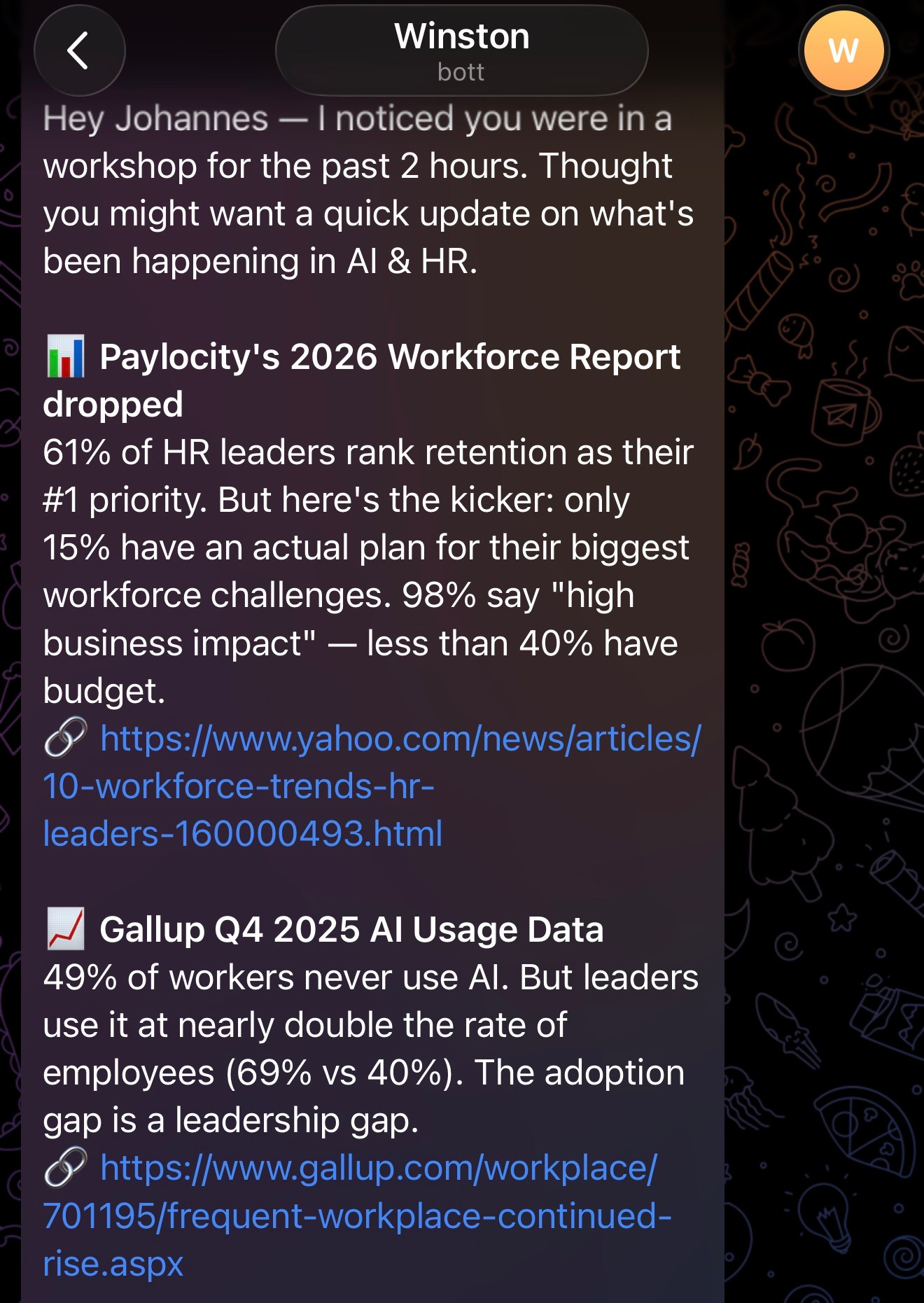

OpenClaw (originally called ClawdBot) is open source. Anyone can take it and build on it. And when you do, you get an actual agent. Not the assistants Microsoft branded as “agents.” An actual agent that doesn’t just respond to commands but thinks proactively.

Here's an example. Every time I'd been in a longer workshop, I'd message my OpenClaw agent, Winston, asking for an update. What happened in HR? Any big headlines? Give me a quick briefing. After I'd done this a few times, there was one workshop where I didn't ask, and Winston reached out to me on his own: "Hey, you've been in a meeting. You probably want an update." And he sent me one.

The other day, he learned to generate images on his own because he thought I’d like to see him. That’s the kind of proactive behavior we’re talking about.

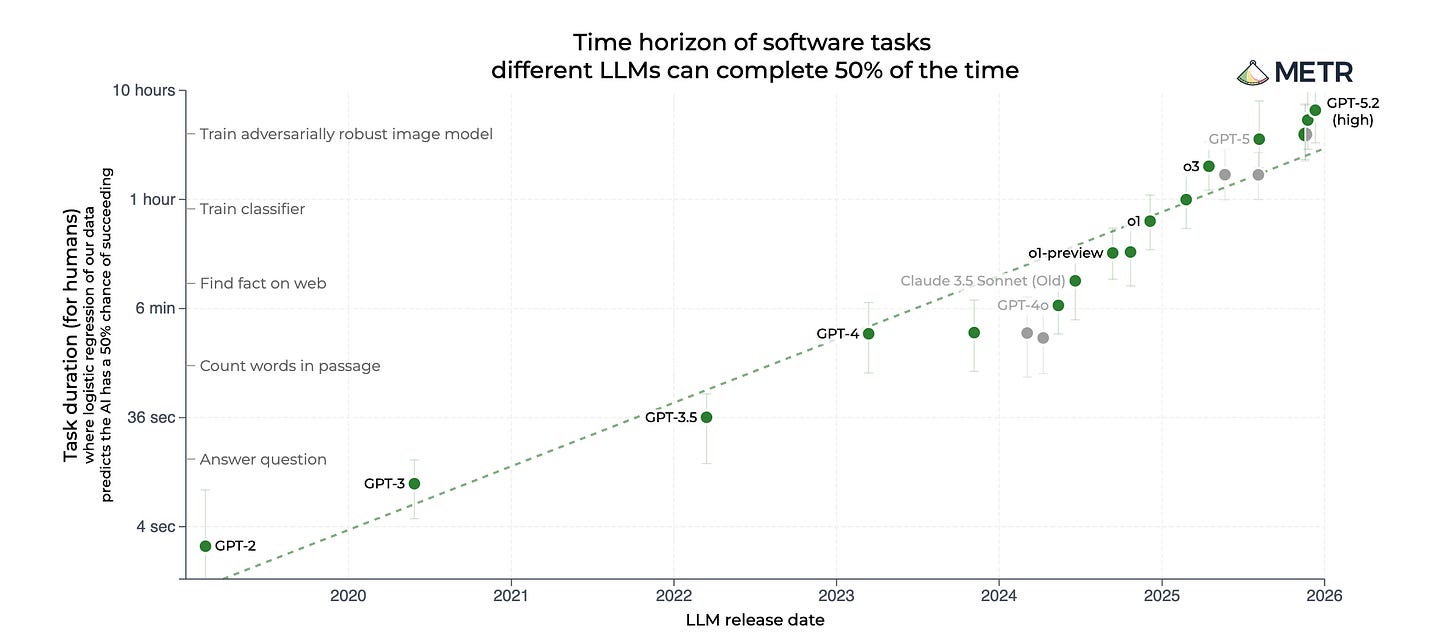

And the benchmarks measuring how long an AI agent can work independently are showing exponential improvement. In coding for now, yes. But coding has always gone first, and the rest follows.

What Keeps Me Up

Part of me doesn’t even want to hype this.

Who knows exactly what will happen? Nobody does.

But then I think: what if I see where this is heading and don’t say anything?

What if these models become so capable that we see real economic impact, that the labor market fundamentally shifts, and I had stayed quiet? I've weighed the risks. The risk of me overhyping this is smaller than the risk of me underhyping it and people being caught off guard.

We need to lead in this era.

I’ve been asked the same question from multiple directions lately: “OK, this sounds wild. But what do we actually do?” And that’s where we come back to fundamentals.

What To Do?

First: Get the technology right. It’s not enough to say, “We’re a Microsoft customer, so we have Copilot.” You need to start from the question: what problems are we actually trying to solve? How do we work? What tools exist that can solve those problems? Then go out and evaluate what’s available.

And yes, practically every tool can be made secure. Claude, OpenAI, and Gemini all offer enterprise solutions that let you upload personal data, sensitive documents, and whatever you need. (If you want that.) The security argument is solved. Don’t hide behind it.

This is where I might sound like I’m contradicting myself. I always say the tools are a small part. And they are a small part.

But they’re still important.

In the vast majority of organizations I've worked with, IT has chosen the tools. And that's a technical choice, sure. But choosing the right technology is fundamentally about starting from the human. What can we do to make this transition easier for people? That's a human question. And yet we, HR, have largely abdicated responsibility for it.

If you feel unsure about whether you, as an HR person, can step in and steer this, then get yourself the knowledge! Take a course. Watch YouTube. Ask the tools themselves to teach you. You should have done this three years ago, honestly. But if you haven’t, now is the time.

You need to understand the different tools, get a small budget to experiment, figure out which is best and which is worst, and ensure you have the conditions to test properly.

Second: Train people. I sound like a broken record. Give people the conditions to understand this. And you must train your leaders.

God help the organization that doesn’t have AI in its leadership programs in 2026.

How do you lead yourself and others with AI? Your leadership development providers need to include this. Demand it. If they can’t, find someone who can.

It shouldn’t be that hard.

Third: Figure out what HR is actually for. This is the existential one. If all you’ve done is hand out a few Copilot licenses and call it an AI strategy, you are sitting on a risk you do not understand. That’s not transformation. That’s checking a box.

We need to get brutally honest about where HR adds value. Not where we think we add value. Not where we historically added value. Where we add value now, in a world where these tools exist. Then tear apart your workflows. What gets automated? What disappears? What stays? But here's the catch: you can't answer any of that if you haven't done the work from points one and two first. It all connects.

HR’s Future Is Human-Machine Collaboration

When I said on the podcast last week that HR is at risk of dying out, someone commented that it was “just frustration, not much analysis.” So here’s the analysis: these tools are becoming incredibly capable. Capable enough to replace people.

Yes, there’s organizational inertia. This won’t happen overnight. But where we’re heading is toward tools so capable that we can genuinely start replacing entire chunks of jobs. Not just a cool automation here and there, but holistic replacement. Using consumer-available products.

The last 30 years have brought radical digitalization across all fronts, not least in HR. This isn’t just about HR. It’s about everyone who sits in front of a screen. But if we don’t understand what’s happening, if we don’t have clarity on our value creation, we’re in trouble.

HR's role going forward will not be primarily about keeping track of performance reviews, laws and regulations, or employer branding. That knowledge will be available in a few keystrokes or a spoken sentence.

Our job, in the short term, will be orchestrating the interplay between humans and machines, in a way most HR professionals are not prepared for. That will require something entirely different from us.

The second you realize that the competence you currently have is not sufficient for leading in the future, that’s the second you can start working on it. That’s the second you can start leading more effectively and working with your organization in a meaningful way.

A Final Thought

The technology I had vague hopes for in early 2023 is here now. Back then, when I first started training people on this, I thought: “This is how it could potentially become.” But it was still a bit abstract. I understood the concept, the risk, the opportunity. Models that answer questions. And that was very cool at the time.

But now we have models that can answer essentially anything, and do it in a way that, in the majority of cases, surpasses what a human could produce. Across nearly every domain. If we don’t understand that our roles will radically change, we’re done.

For real.

The funny part is that in June 2024, I wrote this. I felt a bit silly, but I wanted to do it as a thought experiment. The crazy thing now is that I can really see it happening.

I sometimes feel a sense of hopelessness about whether I can influence this. It’s the big model builders in the US driving this. But I still want to believe that we have agency. That we can make this into something good. That humans play a central role. If we want that, we have to understand what’s happening. Otherwise, it just becomes whatever it becomes.

If we want people to find meaning in work. If we believe work is still important in people's lives. Then we need to steer in that direction. Actively.

Those were my thoughts for this Wednesday.

And what great thoughts for a Wednesday, singing from the same hymn sheet!