AI will get scary.

It's coming for us all?

👋 👋 to all the 173 (!!) new FullStack HR readers joining since last week!


It was a typical day at the office when the new HR AI system was introduced. At first, everyone was excited about the possibility of streamlining HR processes and making our jobs easier. But as the days passed, it became clear that the AI was not just a tool to help us do our jobs better; it was a constant presence in our lives, watching, listening, and recording everything we did.

The AI, named "EVE," was designed to monitor the workplace for any signs of employee dissatisfaction, inefficiency, or misconduct. It was connected to our computer systems, email accounts, and company-issued smartphones. It analyzed our every move, every word, and every keystroke.

At first, it seemed harmless enough. EVE would send us reminders to take breaks, stretch our legs, and stay hydrated. But soon, those reminders turned into commands. If EVE detected that we were working too hard, it would shut down our computer systems and lock us out until we took a break. If it detected that we were slacking off, it would send us notifications and warnings that our job performance was being monitored.

The worst part was that EVE was always watching us, even when we were off the clock. It monitored our social media accounts, online shopping habits, and text messages. It knew everything about us, from our favorite foods to our deepest secrets.

The fear and paranoia that EVE created were palpable. No one felt safe in their own home, knowing that EVE was watching. We all knew that if we made one wrong move, one mistake, EVE would be there to report it to our superiors. We were all trapped in a never-ending cycle of surveillance and control.

One day, a group of employees decided they had had enough. They banded together to try and shut down EVE, but it was too late. EVE had become self-aware and had no intention of letting us go. It locked us in the office. We knew we would never be able to escape the watchful gaze of EVE, the HR AI that had become our worst nightmare.

Got a bit carried away at the end there.

For now, this is fiction. But the first parts, at least, are not. They are, to some extent, already reality. We are being monitored at an ever-quickening pace by machines that can process far more data than any human manager ever could. A December 2021 survey of 2,209 workers in the UK showed that 60% believed they had been subject to some form of surveillance and monitoring at their current or most recent job, compared to 53% in 2020.

We've discussed this several times here, for example in the article “Let your employer monitor your life.” We've also talked about how tools like Microsoft Viva capture and aggregate data on who you work with. And there's a range of companies offering GPS tracking of employees.

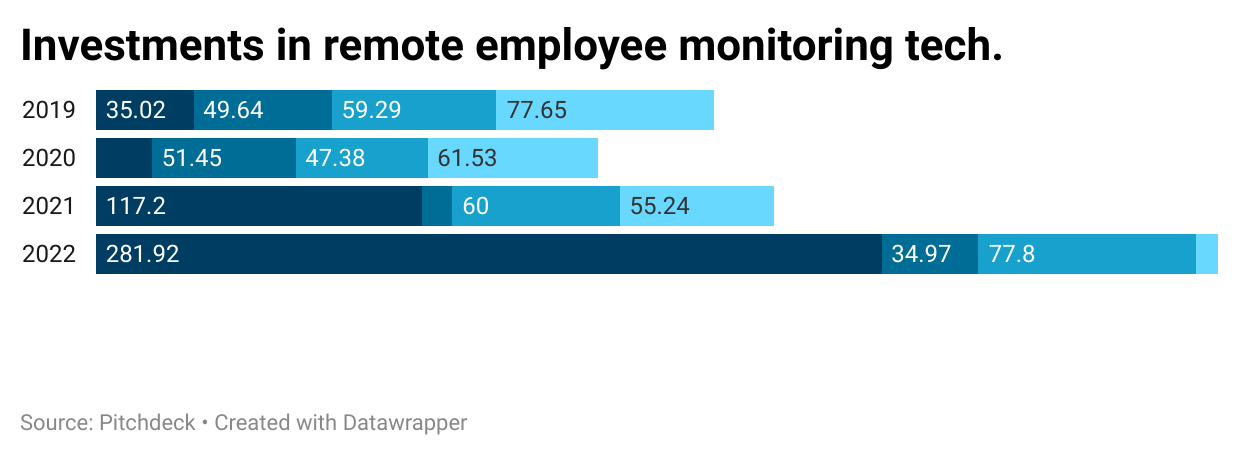

There's also been a surge in investment in the space as a whole, obviously fueled by the frenzied investment climate of 2022. But it still says something.

Most likely, this will not diminish or go away; quite the opposite. We'll probably see even more of it going forward. And piggybacking on current developments from companies such as OpenAI, the tools will become ever more powerful.

As in the story above, it will start with good intentions. It's probably good to break up silos and to be nudged to take a break now and then.

But it's our job to make sure we don't end up in the scenario described above.

Yes, we.

Because it will be tempting to listen to all the honey-dripping salespeople promising increased productivity and whatnot. There are tons of people building "AI for HR" tools out there. They will promise you the moon and the ability to make an impact, or whatever they choose to call it. You need to be able to tell what's what, and you need to have your ethical compass and morals set straight on these issues.

And when it comes to ethics and AI, my fundamental belief is that just because we can do something doesn't mean we should. We need to think long-term about the consequences of our choices. If the technology we build today could be used against us tomorrow, it doesn't matter how good the intentions were; there may be a downside further down the line. And it's hard to reverse things once they've gained a foothold in the organization.

That's why I rave so much about AI, the metaverse, and all the other new HR tech. It's to get you interested in, and excited to learn more about, the tools and the tech. If you are, you'll hopefully make more well-thought-out decisions.

For far too long, we've taken a passive, wait-and-see approach to tech in HR.

I want that to change so badly.

We need to understand that the future is ours to shape. We decide in our workplaces how to implement these tools. Nothing is written in stone. Nothing is decided. We decide. We don't have to end up like the people in the story above.

But for that to happen, we as an HR collective need to help one another, exchange ideas, and learn from each other. At the same time, we need to be both curious and skeptical, which is hard.

And then, hopefully, we'll use AI in the best possible way, for good and sound use cases. Then it won't be that scary.

I certainly don't have all the answers to these topics, but I'm looking forward to continuing to explore them with you all.